Some benchmarking

Hello.

Recently, thanks to the surprisingly helpful Unhelpful, also known as Andrew Mahone, we have a decent, if slightly arbitrary, set of performance graphs. It contains a couple of benchmarks already seen on this blog as well as some taken from The Great Computer Language Benchmarks Game. These benchmarks don't even try to represent "real applications", as they're mostly small algorithmic benchmarks. Interpreters used:

- PyPy trunk, revision 69331 with --translation-backendopt-storesink, which is now on by default

- Unladen swallow trunk, r900

- CPython 2.6.2 release

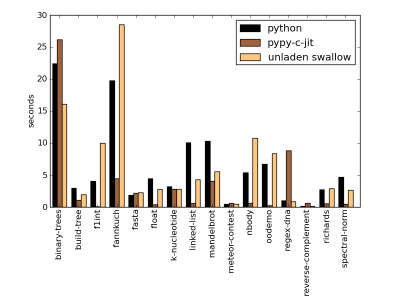

Here are the graphs; the benchmarks and the runner script are available

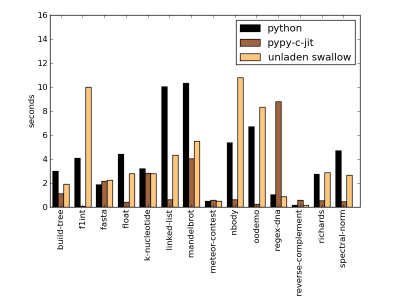

And zoomed in for all benchmarks except binary-trees and fannkuch.

As we can see, PyPy is generally somewhere between the same speed as CPython and 50x faster (f1int). The places where we're the same speed as CPython are places where we know we have problems. For example, generators are not sped up by the JIT and they require some work (although not nearly as much as generators & Psyco :-). The glaring inefficiency is in the regex-dna benchmark. This one clearly demonstrates that our regular expression engine is really, really bad and urgently requires attention.
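To make the generator case concrete, here is a rough sketch of the kind of micro-benchmark that would expose it. It is not one of the benchmarks in the graphs, and the function names are made up for illustration. It does the same summation twice, once through a generator and once as a plain loop:

```python
import timeit


def count_up(n):
    # Generator version: every value is produced by resuming a generator frame.
    i = 0
    while i < n:
        yield i
        i += 1


def sum_with_generator(n):
    total = 0
    for value in count_up(n):
        total += value
    return total


def sum_with_loop(n):
    # Same arithmetic written as a plain loop, with no generator involved.
    total = 0
    i = 0
    while i < n:
        total += i
        i += 1
    return total


if __name__ == "__main__":
    n = 1000000
    for func in (sum_with_generator, sum_with_loop):
        # Best of three runs, one call per run.
        seconds = min(timeit.repeat(lambda: func(n), number=1, repeat=3))
        print("%s: %.3f s" % (func.__name__, seconds))
```

Running it under each interpreter gives a rough idea of how far the generator version lags behind the plain loop on that build. No numbers are quoted here because they depend on the interpreter and the machine.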

The cool thing here is that, although these benchmarks might not represent typical Python applications, they're not uninteresting. They show that algorithmic code does not need to be far slower in Python than in C, so using PyPy one need not worry about algorithmic code being dramatically slow. As many readers would agree, that kills yet another usage of C in our lives :-)

Cheers, fijal

Comments

Wow! This is getting really interesting. Congratulations!

By the way, it would be great if you include psyco in future graphs, so speed junkies can have a clearer picture of pypy's progress.

Very interesting, congratulations on all the recent progress! It would be very interesting to see how PyPy stacks up against Unladen Swallow on Unladen Swallow's own performance benchmark tests, which do include a bit more real-world scenarios.

@Eric: yes, definitely, we're approaching that set of benchmarks

@Luis: yes, definitely, will try to update tomorrow, sorry.

It's good, but...

We are still in the realm of micro-benchmarks. It would be good to compare their performance when working on something larger. Django or Zope maybe?

These last months, you seem to have had almost exponential progress. I guess all those years of research are finally paying off. Congratulations!

Also, another graph for memory pressure would be nice to have. Unladen Swallow is (was?) not very good in that area, and I wonder how PyPy compares.

[nitpick warning]

As a general rule, when mentioning trunk revisions, it's nice to also mention a date so that people know the test was fair. People assume it's from the day you did the tests, and confirming that would be nice.

[/nitpick warning]

How about benchmarking against CPython trunk as well?

cheers

Antoine.

What about memory consumption? That is almost as important to me as speed.

Congratulations!

Could you please remind us how to build and test pypy-jit?

I'm curious why mandelbrot is much less accelerated than, say, nbody. Does PyPy not JIT complex numbers properly yet?

@wilk ./translate.py -Ojit targetpypystandalone.py

@Anon Our array module is in pure Python and much less optimized than CPython's.

How long until I can do

pypy-c-jit translate.py -Ojit targetpypystandalone.py

?

So far, when I try, I get

NameError: global name 'W_NoneObject' is not defined

https://paste.pocoo.org/show/151829/

AFAIU it's not PyPy's regex engine being "bad" but rather the fact that the JIT generator cannot consider and optimize the loop in the regex engine, as it is a nested loop (the outer one being the bytecode interpretation one).

@holger: yes, that explains why regexps are not faster in PyPy, but not why they are 5x or 10x slower. Of course our regexp engine is terribly bad. We should have at least a performance similar to CPython.

Benjamin, is it really an issue with array? The inner loop just does complex arithmetic. --Anon

@Anon I'm only guessing. Our math is awfully fast.

@Anon, @Benjamin

I've just noticed that W_ComplexObject in objspace/std/complexobject.py is not marked as _immutable_=True (as e.g. W_IntObject is), so it is entirely possible that the JIT is not able to optimize math with complexes as it does with ints and floats. We should look into it; it is probably easy to find out.

guys, sorry, who cares about *seconds*??

why didn't you normalize to the test winners? :)

So, um, has anyone managed to get JIT-ed pypy to compile itself?

When I tried to do this today, I got this:

https://paste.pocoo.org/show/151829/

@Leo:

yes, we know that bug. Armin is fixing it right now on faster-raise branch.

antonio: good point. On second thought, though, it's not a *really* good point because we don't have _immutable_=True on floats either...

@Maciej Great! It'll be awesome to have a (hopefully much faster??) JITted build ... it currently takes my computer more than an hour ...

@Leo it's likely to take tons of memory, though.

It would perhaps also be nice to compare the performance with one of the current JavaScript engines (V8, SquirrelFish, etc.).

Nice comparisons - and micro-performance looking good. Congratulations.

HOWEVER - there is no value in having three columns for each benchmark. The overall time is arbitrary; all that matters is the relative time, so you might as well normalise all graphs to CPython = 1.0, for example. The relevant information is then easier to see!
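A minimal sketch of that normalisation, assuming the timings are available as seconds per interpreter for each benchmark. The function name, the benchmark names, and the numbers below are made up for illustration and are not taken from the published results:

```python
def normalize_to_baseline(timings, baseline="cpython"):
    """Divide every interpreter's time by the baseline's time, per benchmark."""
    normalized = {}
    for benchmark, per_interpreter in timings.items():
        base = per_interpreter[baseline]
        normalized[benchmark] = dict(
            (name, seconds / base) for name, seconds in per_interpreter.items()
        )
    return normalized


# Made-up timings in seconds, for illustration only (not measured results).
example = {
    "bench_a": {"cpython": 10.0, "pypy": 2.0, "unladen": 8.0},
    "bench_b": {"cpython": 4.0, "pypy": 4.0, "unladen": 5.0},
}

result = normalize_to_baseline(example)
```

With this scaling the CPython column is always 1.0, values below 1.0 mean faster than CPython, and values above 1.0 mean slower, so benchmarks with very different absolute run times can share one axis.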

it's called "The Computer Language Benchmarks Game" these days...

Tom is right, normalizing the graphs to cpython = 1.0 would make them much more readable.

Anyway, this is a very good job from Unhelpful.

Thanks!

Do any of those benchmarks work with shedskin?

glad to see someone did something with my language shootout benchmark comment ;)

I checked https://www.looking-glass.us/~chshrcat/python-benchmarks/results.txt but it doesn't have the data for Unladen Swallow. Where are the numbers?
